[Warning: technical discussion]

Lately I've been spending some time exploring forecasts of short-duration extreme events on the seasonal forecast horizon, i.e. months ahead. Seasonal forecasts are usually presented in terms of the shift in probabilities for seasonal-average conditions, for example total rainfall or average temperature over the course of a summer, but it's interesting to consider whether we can say anything about the chance of short-duration extreme outcomes within that seasonal window.

To look at this problem, I'm using forecast data from the ECMWF and UK Met Office seasonal models, available via the EU's Copernicus service. The models produce daily data out to 6 months in the future, and as usual there is an ensemble of different outcomes to sample the uncertainty. Moreover, in addition to the real-time forecasts, the models are run in hindcast mode for 1993-2016, so that we can calculate things like model bias and model skill.

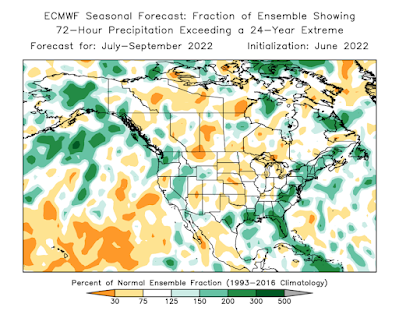

Here's the result that grabbed my attention and prompted this admittedly technical post: notice the wet signal over interior Alaska. Qualitatively, this shows an enhanced risk of an extreme 3-day precipitation event some time during the July-September period.

Bear with me on the explanation of this map, as it's not entirely simple. There are two steps involved in the calculation.

First, use the hindcasts to find the threshold for a once-in-24-year precipitation event in the model climate for this time of year. The 24-year recurrence interval is arbitrary, but it makes the calculation easy with 24 years of hindcast data and corresponding observations. On average, 1 in 24 model ensemble members show a 3-day precipitation event of this magnitude at some point in the July-September window.
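The threshold step can be sketched in a few lines of Python. This is a minimal illustration under assumptions the post doesn't spell out: the hindcast for one grid cell is held as a NumPy array of daily precipitation with shape (years, members, days) covering July-September, each member-season is summarized by its largest 3-day total, and the threshold is the (1 - 1/24) quantile of those maxima pooled over all years and members. The function names and the synthetic gamma-distributed data are hypothetical stand-ins, not the Copernicus data or my actual code.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def seasonal_max_3day(daily_precip):
    """Largest 3-day precipitation total in the window.

    daily_precip: array of shape (..., n_days), time on the last axis.
    Returns one value per member-season, shape (...).
    """
    # All overlapping 3-day windows, summed, then the seasonal maximum.
    windows = sliding_window_view(daily_precip, 3, axis=-1)
    return windows.sum(axis=-1).max(axis=-1)


def once_in_n_year_threshold(hindcast, n_years=24):
    """Threshold exceeded, on average, by 1 in n_years member-seasons.

    hindcast: daily precipitation for ONE grid cell (assumed layout),
    shape (n_years, n_members, n_days), covering the season of interest.
    """
    maxima = seasonal_max_3day(hindcast).ravel()  # pool all years and members
    return float(np.quantile(maxima, 1.0 - 1.0 / n_years))


# Synthetic stand-in for hindcast data (NOT real model output):
# 24 years x 25 members x 92 days (1 July to 30 September), mm/day.
rng = np.random.default_rng(0)
hindcast = rng.gamma(shape=0.5, scale=4.0, size=(24, 25, 92))
threshold = once_in_n_year_threshold(hindcast)
frac_above = np.mean(seasonal_max_3day(hindcast) > threshold)
print(f"threshold {threshold:.1f} mm, fraction above {frac_above:.3f}")  # close to 1/24
```

Pooling all member-seasons before taking the quantile is what makes "1 in 24 ensemble members" hold on average over the hindcast; a real calculation would repeat this for every grid cell and would also have to deal with details such as start dates and lead times.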

Second, count the number of ensemble members in the current forecast that show a 3-day precipitation total above this threshold, and divide that fraction by 1/24 to get the change in risk relative to the model climate. For instance, if 5/51 members show the extreme, then the risk is about 2.4 times normal (240% of normal on the map). If only a single member (1/51) shows it, then the risk is about 50% of normal. Of course, all of the probabilities are small: 5/51 members is still only a ~10% chance.
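The arithmetic of the second step is simple enough to write out. In the sketch below (hypothetical function names; the gridded version assumes the seasonal-max 3-day totals for each forecast member and the hindcast thresholds are already available as arrays), the fraction of members above the threshold is divided by the climatological 1/24, which is the same as multiplying by 24:

```python
import numpy as np


def risk_ratio(n_exceed, n_members, return_period=24):
    """Forecast probability of exceeding the threshold, relative to climatology.

    Climatologically 1 in `return_period` members exceeds the threshold,
    so dividing by 1/return_period gives the ratio to normal (1.0 = 100%).
    """
    return (n_exceed / n_members) / (1.0 / return_period)


def risk_ratio_map(forecast_max3day, threshold, return_period=24):
    """Gridded version (assumed array layout).

    forecast_max3day: seasonal-max 3-day totals, shape (n_members, ny, nx).
    threshold: once-in-`return_period` hindcast thresholds, shape (ny, nx).
    """
    frac_exceed = (forecast_max3day > threshold).mean(axis=0)  # fraction of members
    return frac_exceed * return_period


for k in (1, 5):
    print(f"{k}/51 members: probability {k / 51:.1%}, "
          f"risk {risk_ratio(k, 51):.0%} of normal")
# 1/51 members: probability 2.0%, risk 47% of normal
# 5/51 members: probability 9.8%, risk 235% of normal
```

The exact ratios are 47% and 235% of normal, which the text rounds to about 50% and 240%.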

The spatial patterns in the map above are fairly consistent with the model's standard forecast for 3-month total precipitation, as you'd expect, although the extremes-focused calculation provides some interesting nuance. Here's the Copernicus map for July-September total precipitation anomaly, i.e. departure from normal.

The Climate Prediction Center's seasonal forecast (see below) also has a slightly enhanced probability of significantly above-normal precip for much of interior Alaska, but of course by itself this does not necessarily imply an increased risk of a short-duration extreme event. It's certainly possible to see upper-tercile seasonal total precipitation without any particularly extreme events (e.g. summer 2015 in Fairbanks), but there's obviously a correlation in general.

How about the UK Met Office model? Interestingly, this highly regarded model also shows a signal for increased risk in the southern interior, although the forecasts are fairly dissimilar otherwise.

The burning question here, of course, is whether the models have any demonstrable skill in anticipating extremes. This is a very challenging question, because by definition we only have a single extreme outcome at each location in the 24-year hindcast history. Running any meaningful statistics requires aggregating the data over a large area, and therefore smoothing out potentially important local variations in the model skill.

We also have to reckon with the fact that gridded reanalysis data, which is typically used as "ground truth" in this kind of work, is undoubtedly deficient when it comes to representing short-duration precipitation extremes. A quick calculation based on ERA5 "observations" suggests that skill may be very marginal or non-existent over Alaska at this time of year, but it's actually possible that real-world skill is better.
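The post doesn't say which verification metric was behind the ERA5 check. One common choice for rare-event probability forecasts, swapped in here purely as an illustration, is the Brier skill score measured against the climatological probability of 1/24, with all years and grid cells in a region pooled together to get enough events. The sketch assumes 0/1 "observed" exceedances defined relative to the reanalysis's own once-in-24-year threshold; the function name and toy data are hypothetical.

```python
import numpy as np


def brier_skill_score(forecast_prob, observed, clim_prob=1 / 24):
    """Brier skill score of exceedance forecasts vs. climatology, pooled.

    forecast_prob: fraction of members above the model threshold, any shape
        (e.g. years x grid cells in the region being aggregated).
    observed: 1 where the verifying data (assumed: reanalysis) exceeded its
        own once-in-24-year threshold, else 0; same shape as forecast_prob.
    """
    f = np.asarray(forecast_prob, dtype=float).ravel()  # pool everything
    o = np.asarray(observed, dtype=float).ravel()
    bs = np.mean((f - o) ** 2)              # Brier score of the forecasts
    bs_ref = np.mean((clim_prob - o) ** 2)  # always forecasting 1/24
    return 1.0 - bs / bs_ref                # > 0 means better than climatology


# Sanity checks on toy arrays (24 years x 10 grid cells), not real data.
rng = np.random.default_rng(1)
obs = (rng.random((24, 10)) < 1 / 24).astype(float)
print(brier_skill_score(obs, obs))                       # perfect forecast -> 1.0
print(brier_skill_score(np.full(obs.shape, 1 / 24), obs))  # climatology -> 0.0
```

A score near zero, or below it, would correspond to "marginal or non-existent" skill; pooling over a region gives a usable sample size at the cost of blurring local differences in skill.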

In any case, it's an interesting result, and food for thought regarding the best way to make these forecasts. Let's see what happens in the next 3 months.

Richard, is it possible to use a "Wayback machine" to compare predictions with what actually occurred? For example, our recent dry spell in Interior Alaska: can it be analyzed using the above models, but from before the event? Yes, we can expect rain in the fall that often becomes a gusher, so I'm not surprised at the forecast.

Yes, certainly one can go back and see what the models predicted, both in "real-time" and "hindcast" mode. Looks like the ECMWF and UKMO models both hinted at a dry April-May-June for interior Alaska, e.g.

https://climate.copernicus.eu/charts/c3s_seasonal/c3s_seasonal_spatial_ecmf_rain_3m?facets=Parameters,precipitation&time=2022030100,744,2022040100&type=tsum&area=area06

https://climate.copernicus.eu/charts/c3s_seasonal/c3s_seasonal_spatial_egrr_rain_3m?facets=Parameters,precipitation&time=2022030100,744,2022040100&type=tsum&area=area06

Weak signals, however, and other models disagreed, e.g.

https://www.cpc.ncep.noaa.gov/products/NMME/archive/2022030800/current/usprate_Seas1.html

The CPC issued "equal chances" for precipitation:

https://www.cpc.ncep.noaa.gov/products/archives/long_lead/gifs/2022/202203month.gif

Yes, the top 3 above seem to have called it well. The 4th looks like the typical model output for ENSO, with a span of predictions. Finally, the "equal chances" product plays it safe as usual. Good stuff. Thanks for the links.
